Cloud Service Provider

for Developers and Teams

whether you have one virtual machine or ten thousand.

Hostman's commitment to simplicity

and budget-friendly solutions

monthly charges


base daily/weekly fee

monthly/hourly fee

10 TB / mo

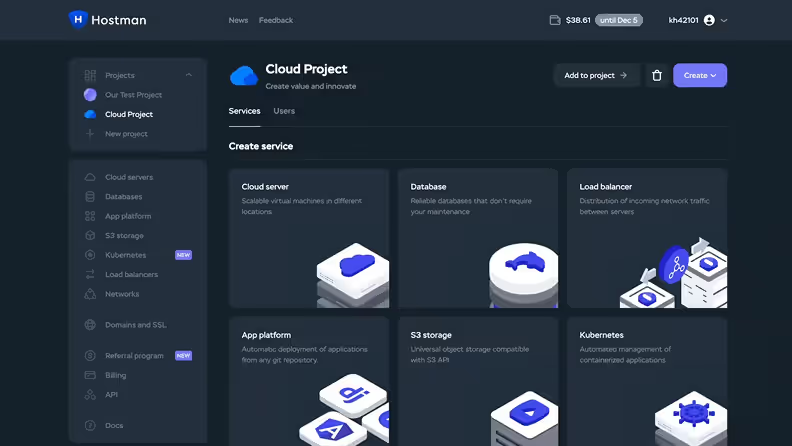

Robust cloud services for every demand

Cloud Servers

Databases

App Platform

Kubernetes

Firewall

Managed Backups

Images

Hear it from our users

"Hostman Comprehensive Review of Simplicity and Potential"

"A perfect fit for everything cloud services!"

"Superb User Experience"

"Streamlined Cloud Excellence!"

"Seamless and easy to use Hosting Solution for Web Applications"

"Availing Different DB Engine Services Provided by Hostman is Convenient for my Organization usecases"

"Hostman is a great fit for me"

"Perfect website"

"Simplifying Cloud Deployment with Strengths and Areas for Growth"

"Streamlined Deployment with Room for Improvement"

Deploy a cloud server

in just a few clicks

Global Infrastructure, Local Speed

If your ideal location isn't listed, feel free to request it. Your needs become our goals.

Latest News

How to Set Up an FTP Server on Windows Server 2019

Installing and Configuring Zabbix on Ubuntu 22.04

Configuring Samba on Debian

Enabling and Configuring IPv6: Full Tutorial

How to Install Node.js and NPM on Ubuntu 24.04

Dedicated Servers Are Now Available on Hostman

Top Alternatives to Speedtest for Checking Your Internet Speed

IT Cost Optimization: Reducing Infrastructure Expenses Without Compromising Performance

Apache Kafka and Real-Time Data Stream Processing

VMware Cloud Director: What It Is and How to Use It

Answers to Your Questions

Hostman is a cloud platform where developers and tech teams can host their solutions: websites, e-commerce stores, web services, applications, games, and more. With Hostman, you have the freedom to choose services, reserve as many resources as you need, and manage them through a user-friendly interface.

Currently, we offer ready-to-go solutions for launching cloud servers and databases, as well as a platform for testing any application.

- Cloud Servers. Your dedicated computing resources on servers in Germany, the USA, and the Netherlands. Soon, we'll also be in Singapore, Egypt, and Nigeria. We offer 25+ ready-made setups with pre-installed environments and software for analytics systems, gaming, e-commerce, streaming, and websites of any complexity.

- Cloud Databases. Instant setup for any popular database management system (DBMS), including MySQL, PostgreSQL, Redis, Apache Kafka, and OpenSearch.

- Apps. Connect your GitHub, GitLab, or Bitbucket account and test your websites, services, and applications. Whatever framework you are using (React, Angular, Vue, Next.js, Ember, or another), we probably support it on our app platform.

Your data's security is our top priority. Only you will have access to whatever you host with Hostman.

We also house our servers in Tier III data centers, which are built for high reliability. All data centers comply with the following international standards:

- ISO: Data center design standards

- PCI DSS: Payment data processing standards

- GDPR: EU standards for personal data protection

User-Friendly. With Hostman, you're in control. Manage your services, infrastructure, and pricing structures all within an intuitive dashboard. Cloud computing has never been this convenient.

Great Uptime. Experience peace of mind with 99.98% uptime backed by our SLA. Your projects stay live, with no interruptions or unpleasant surprises.

Around-the-Clock Support. Our experts are ready to assist and consult at any hour. Encountered a hurdle that requires our intervention? Please don't hesitate to reach out. We're here to help you through every step of the process.

At Hostman, you pay only for the resources you genuinely use, down to the hour. No hidden fees, no restrictions.

Pricing starts as low as $4 per month, providing you with a single-core processor at 3.2 GHz, 1 GB of RAM, and 25 GB of persistent storage. On the higher end, we offer plans up to $75 per month, which gives you access to 8 cores, 16 GB of RAM, and 320 GB of persistent storage.

For a detailed look at all our pricing tiers, please refer to our comprehensive pricing page.
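To make the hourly billing described above concrete, here is a minimal Python sketch that prorates a monthly plan price by hours used. The 730-hour month, the cap at the monthly price, and the function names are assumptions for illustration only; they are not taken from Hostman's billing rules, so check the pricing page for the actual terms.

```python
# Assumption: an average month of 730 hours is used to derive the hourly rate.
HOURS_PER_MONTH = 730


def hourly_rate(monthly_price: float, hours_per_month: int = HOURS_PER_MONTH) -> float:
    """Derive an hourly rate from a monthly plan price."""
    return monthly_price / hours_per_month


def usage_cost(monthly_price: float, hours_used: float,
               hours_per_month: int = HOURS_PER_MONTH) -> float:
    """Estimate the charge for hours_used, never exceeding the monthly price.

    The cap is an assumption of this sketch, not a documented billing rule.
    """
    billable_hours = min(hours_used, hours_per_month)
    return round(hourly_rate(monthly_price, hours_per_month) * billable_hours, 2)


if __name__ == "__main__":
    # Three days (72 hours) on the $4 and $75 plans mentioned above.
    for plan_price in (4.0, 75.0):
        print(f"${plan_price:.0f}/mo plan, 72 hours: ${usage_cost(plan_price, 72):.2f}")
```

Under these assumptions, a server that runs for only a few days costs a small fraction of the monthly price, which is the practical effect of paying by the hour.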

Yes, our technical specialists are available 24/7, providing continuous support via chat, email, phone, and WhatsApp. We strive to respond to inquiries within minutes, ensuring you're never left stranded. Feel free to reach out for any issue—we're here to assist.

With Hostman, you can scale your servers instantly and effortlessly, allowing for configuration upsizing or downsizing, and bandwidth adjustments.

Please note: While server disk space can technically only be increased, you have the flexibility to create a new server with less disk space at any time, transfer your project, and delete the old server.

Hostman ensures 99.98% reliability per SLA. Additionally, we house our servers exclusively in Tier III data centers, which comply with all international security standards.

Just sign up and select the solution that fits your needs. We have ready-made setups for almost any project: a vast marketplace for ordering servers with pre-installed software, set plans, a flexible configurator, and even resources for custom requests.

If you need any assistance, reach out to our support team. Our specialists are always happy to help, advise on the right solution, and migrate your services to the cloud—for free.

Hostman guarantees a 99.98% server availability level according to the SLA.
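As a rough illustration of what a 99.98% availability level means in practice, the following Python sketch converts the percentage into a maximum downtime budget. The period lengths (a 30-day month and a 365-day year) and the function name are assumptions of this example; the SLA itself defines how availability is actually measured.

```python
def allowed_downtime_minutes(sla_percent: float, period_hours: float) -> float:
    """Return the maximum downtime, in minutes, that still meets the SLA."""
    unavailable_fraction = 1.0 - sla_percent / 100.0
    return unavailable_fraction * period_hours * 60.0


# Assumed measurement periods: a 30-day month and a 365-day year.
monthly = allowed_downtime_minutes(99.98, 30 * 24)
yearly = allowed_downtime_minutes(99.98, 365 * 24)

print(f"Per 30-day month: {monthly:.2f} minutes")   # 8.64 minutes
print(f"Per 365-day year: {yearly:.2f} minutes")    # 105.12 minutes
```

In other words, 99.98% availability leaves a budget of under nine minutes of downtime in a 30-day month.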

Do you have questions,

comments, or concerns?

whether you need help or are just unsure of where to start.