Top Cloud Servers

with instant scalability, powerful hardware, and simple control.

& NVMe Disks

Unlimited Traffic

& Support

Management

Hostman's commitment to simplicity and budget-friendly solutions

monthly charges

monthly charges

base daily/weekly fee

monthly/hourly fee

10 TB / mo

Cloud server pricing

Deploy any software in seconds

What is a cloud server?

Ready to buy a cloud server?

Efficient tools for your convenient work

Backups, Snapshots

Firewall

Load Balancer

Private Networks

Trusted by 500+ companies and developers worldwide

Recognized as a Top Cloud Hosting Provider

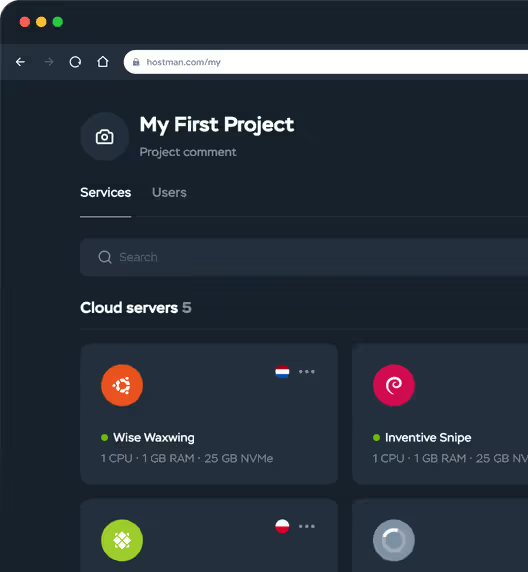

One panel to rule them all

Global Infrastructure, Local Speed

If your ideal location isn't listed, feel free to request it: your needs become our goals.

Hear it from our users

"Hostman Comprehensive Review of Simplicity and Potential"

"A perfect fit for everything cloud services!"

"Superb User Experience"

"Streamlined Cloud Excellence!"

"Seamless and easy to use Hosting Solution for Web Applications"

"Availing Different DB Engine Services Provided by Hostman is Convenient for my Organization usecases"

"Hostman is a great fit for me"

"Perfect website"

"Simplifying Cloud Deployment with Strengths and Areas for Growth"

"Streamlined Deployment with Room for Improvement"

More cloud services from Hostman

Managed Databases

Apps

Kubernetes

Firewall

Cloud Servers Tutorials

Setting Up NTP on a Server: A Step-by-Step Guide

How to Install VNC on Ubuntu

How to Migrate From Zapier to n8n and Organize n8n Workflows

Deploying and Configuring Keycloak

How to Correct Server Time

How to Set Up Network Storage with FreeNAS

Server Hardening

How to Use SSH Keys for Authentication

How to Protect a Server from DDoS Attacks

How to Protect a Server: 6 Practical Methods

Tailored cloud server solutions for every need

General-purpose cloud servers for web hosting

High-performance servers for cloud computing

For businesses needing powerful resources for tasks like AI, machine learning, or data analysis, our high-performance cloud servers are built to process large datasets efficiently. Equipped with 3.3 GHz processors and high-speed NVMe storage, they ensure smooth execution of even the most demanding applications.

Storage-optimized cloud servers for data-driven operations

Memory-optimized servers for heavy workloads

These servers are built for applications that require high memory capacity, such as in-memory databases or real-time analytics. With enhanced memory resources, they ensure smooth handling of large datasets, making them ideal for businesses with memory-intensive operations.

In-depth answers to your questions

A Hostman cloud server is a virtual machine hosted in our Tier III data centers with guaranteed resources (CPU, RAM, NVMe storage) and full root access. Unlike traditional hosting, your project isn't tied to one piece of hardware: resources are pooled in the cloud, so you get flexibility, performance, and reliability from day one.

With Hostman you get:

- Scalability on demand – upgrade your server resources instantly as your project grows.
- High performance – NVMe storage and enterprise-grade infrastructure ensure maximum speed.
- 99.98% uptime SLA – reliable cloud architecture keeps your business online.
- Global coverage – choose data centers in strategic locations for lower latency to your users.
- Transparent pricing – no hidden fees; pay only for what you actually use.
- Full control – manage your server with root access and install any apps you need.
- 24/7 expert support – real people available anytime to solve your issues.

Plans range from 1 CPU core with 1 GB RAM to 8 CPU cores and 16 GB RAM, with fast NVMe SSDs and dedicated IPs.

Yes. You can scale your CPU, RAM, bandwidth, and channel width anytime with just a few clicks directly in the control panel. With Hostman, you can enhance all the important characteristics of your server, with hourly billing.

Pricing is flexible and billed hourly, so you only pay for the resources you use.

Yes, contact support to request a test period and free project migration to the cloud.

Our servers are hosted in GDPR-compliant, ISO/IEC 27001-certified Tier III data centers with DDoS protection, daily backups, and 99.98% uptime according to the SLA.

Before deploying your server, you can configure SSH keys and disable password-based SSH access for enhanced security. Since only you control the security inside your server, including your applications, data, and configurations, we recommend setting up your own firewall rules, managing access, and keeping your software up to date.
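As a rough illustration of checking the "disable password-based SSH access" step, here is a minimal Python sketch that inspects the text of an OpenSSH `sshd_config` file. It is not a Hostman tool, and the helper names `global_sshd_options` and `password_login_disabled` are hypothetical. It assumes OpenSSH semantics: keywords are case-insensitive, the first value seen wins, and `PasswordAuthentication`, `KbdInteractiveAuthentication`, and `PubkeyAuthentication` default to `yes` when absent. For simplicity it only reads the global section (it stops at the first `Match` block) and does not follow `Include` directives.

```python
import re

# Assumed OpenSSH defaults for keywords that are absent from the file.
DEFAULTS = {
    "passwordauthentication": "yes",
    "kbdinteractiveauthentication": "yes",
    "pubkeyauthentication": "yes",
}


def global_sshd_options(config_text: str) -> dict[str, str]:
    """Collect lowercased keyword values from the global section of sshd_config.

    The first value seen for a keyword wins, mirroring sshd. Parsing stops at
    the first Match block; Include directives are not followed (a simplification).
    """
    options: dict[str, str] = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Accept both "Keyword value" and "Keyword=value" forms.
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        keyword = parts[0].lower()
        value = parts[1].strip().lower() if len(parts) > 1 else ""
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        if keyword == "challengeresponseauthentication":
            keyword = "kbdinteractiveauthentication"  # deprecated alias
        options.setdefault(keyword, value)
    return options


def password_login_disabled(config_text: str) -> bool:
    """Hypothetical check: key-based SSH allowed, password prompts disabled."""
    opts = {**DEFAULTS, **global_sshd_options(config_text)}
    return (
        opts["passwordauthentication"] == "no"
        and opts["kbdinteractiveauthentication"] == "no"
        and opts["pubkeyauthentication"] == "yes"
    )


if __name__ == "__main__":
    hardened = """
PubkeyAuthentication yes
PasswordAuthentication no
KbdInteractiveAuthentication no
"""
    print(password_login_disabled(hardened))  # True
    print(password_login_disabled(""))  # False: defaults still allow passwords
```

The check also requires `KbdInteractiveAuthentication no` because keyboard-interactive authentication can still present a password prompt through PAM even when `PasswordAuthentication` is off. Run `sshd -t` on the server itself for an authoritative syntax check.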

24/7 support is available via phone and chat, with fast response times and expert help.

Yes, you have full control to install any OS, software, or custom image.

Hostman provides regular backups and an optional automatic backup service for extra protection.

Yes, we guarantee 99.98% uptime under a Service Level Agreement (SLA).

Cloud servers are hosted in reliable Tier III data centers in the EU and the US.

Yes, you can run and manage multiple cloud servers, databases, and VPS instances from one dashboard.

Servers launch instantly from the control panel, with software installation taking just minutes.

At Hostman, the right server depends on your project's needs:

- Websites and blogs → Start with a basic plan (1–2 vCPU, 2 GB RAM).
- Databases, SaaS apps, e-commerce → Choose medium plans (2–4 vCPU, 4–8 GB RAM) for stable performance.
- High-traffic apps, AI workloads, or enterprise projects → Go for advanced plans (8+ vCPU, 16+ GB RAM) with dedicated NVMe storage.
- Still unsure? → Start with our 30-day free trial, test the performance, and scale up when you're ready.

Yes, upgrade your specs anytime from the control panel, or downgrade with help from our support team.

Do you have questions, comments, or concerns?

whether you need help or are just unsure of where to start.