MySQL Hosting from Hostman

What is MySQL?

Use Cases for MySQL Servers

Web Hosting & Applications

Content Management Systems

Customer Relationship Management

E-commerce Platforms

Business Intelligence & Analytics

Financial Systems

MySQL Hosting Prices For Any Case

Each plan includes free bandwidth

Why Tech Teams Choose Hostman

99.98% SLA uptime

Clear, pay‑as‑you‑go pricing

24/7 expert support

Hear it from our users

"Hostman Comprehensive Review of Simplicity and Potential"

"A perfect fit for everything cloud services!"

"Superb User Experience"

"Streamlined Cloud Excellence!"

"Seamless and easy to use Hosting Solution for Web Applications"

"Availing Different DB Engine Services Provided by Hostman is Convenient for my Organization usecases"

"Hostman is a great fit for me"

"Perfect website"

"Simplifying Cloud Deployment with Strengths and Areas for Growth"

"Streamlined Deployment with Room for Improvement"

Trusted by 500+ companies and developers worldwide

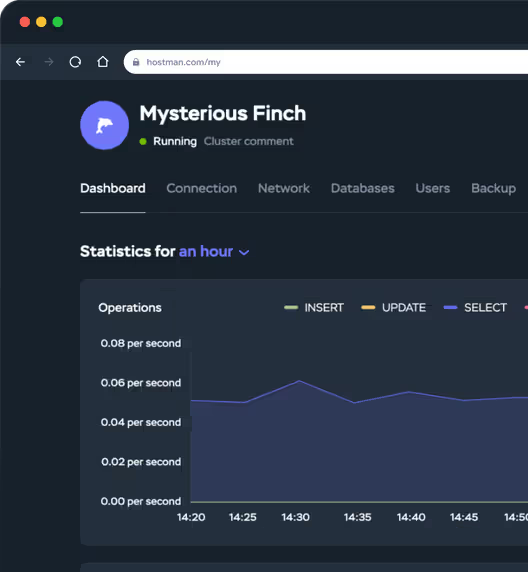

One Panel to Manage Your MySQL Hosting

Need help getting started? Learn how to create database clusters on Hostman in just a few clicks—no server admin skills required.

Everything is ready to deploy your MySQL database to our cloud: up and running in seconds!

Databases for all tastes

MySQL

PostgreSQL

Redis

OpenSearch

ClickHouse

Kafka

RabbitMQ

Documentation

Global Infrastructure, Local Speed

If your ideal location isn't listed, feel free to request it. Your needs become our goals.

MySQL Tutorials

How to Install MySQL on Debian

How to Import and Export Databases in MySQL or MariaDB

How to Create a MySQL Database Dump

MySQL Data Types: Overview, Usage Examples & Best Practices

How to Show Users in MySQL

How to Secure MySQL Server

Creating an SSH Tunnel for MySQL Remote Access

How To Use Triggers in MySQL

The UPDATE Command: How to Modify Records in a MySQL Table

How to Find and Delete Duplicate Rows in MySQL with GROUP BY and HAVING Clauses

More cloud services from Hostman

Managed Databases

App Platform

Kubernetes

Firewall

Answers to Your Questions

MySQL hosting is a service that provides infrastructure and tools to run and manage MySQL databases online. It can be shared, VPS-based, or fully managed, depending on the level of control and automation you need.

You can manage MySQL hosting through a control panel, SSH access, or a managed service dashboard to handle users, backups, updates, and performance. For easier management, Hostman offers paid backups, monitoring, and security tools.

We support the two most popular and reliable versions: MySQL 5.7 and MySQL 8. These are trusted by developers worldwide and are fully optimized for cloud environments—whether you're running a small project or a production-grade application.

Hostman's MySQL databases run on top-tier Intel and AMD servers with lightning-fast NVMe storage. You’ll get data transfer speeds of 100–200 Mbps, and up to 1 Gbps inside private networks—perfect for anything from websites with steady traffic to demanding apps that need high-speed access and stability.
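To put those speeds in perspective, here is a rough back-of-the-envelope sketch of how long a database dump might take to move at each rate. The 10 GB dump size and the `transfer_seconds` helper are illustrative assumptions, not Hostman figures. The calculation assumes the link is fully used and ignores protocol overhead, so real transfers will take somewhat longer.

```python
def transfer_seconds(size_gb: float, link_mbps: float) -> float:
    """Ideal time in seconds to move size_gb gigabytes over a link_mbps link."""
    # Decimal units: 1 GB = 1000 MB, and 1 byte = 8 bits.
    size_megabits = size_gb * 1000 * 8
    return size_megabits / link_mbps


# Hypothetical 10 GB dump at the speeds quoted above.
DUMP_SIZE_GB = 10
for label, mbps in [
    ("100 Mbps", 100),
    ("200 Mbps", 200),
    ("1 Gbps (private network)", 1000),
]:
    minutes = transfer_seconds(DUMP_SIZE_GB, mbps) / 60
    print(f"{label}: about {minutes:.1f} min")
```

Under these assumptions, the same dump that needs roughly 13 minutes at 100 Mbps moves in a little over a minute across a 1 Gbps private network.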

Hostman provides a secure managed MySQL database solution. We use Tier III data centers with ISO, PCI DSS, and GDPR compliance. Access to your cloud-hosted MySQL database is strictly controlled and customizable.

You can manage your MySQL cloud database using tools like Adminer or phpMyAdmin, or directly through the Hostman panel, where you can monitor performance, manage access, and configure backups.

Our cloud MySQL hosting lets you scale CPU, RAM, and storage dynamically. You pay only for what you use, and scaling is applied instantly from the dashboard.

Unlike traditional MySQL server hosting, MySQL cloud hosting lets you skip the setup and maintenance. With managed MySQL services from Hostman, infrastructure, updates, backups, and scaling are handled automatically.

Hostman provides free technical support for all MySQL hosting plans. You'll also have access to documentation, scaling recommendations, and real-time assistance.

Do you have questions, comments, or concerns?

Get in touch, whether you need help or are just unsure of where to start.