Cloud Database

Hostman's commitment to simplicity and budget-friendly solutions


Tailored database solutions for every need

MySQL

PostgreSQL

Redis

MongoDB

OpenSearch

ClickHouse

Kafka

RabbitMQ

What is a cloud database?

Get started with the Hostman cloud database platform for reliable, scalable cloud database solutions that grow with your business.

Transparent, predictable pricing for your needs

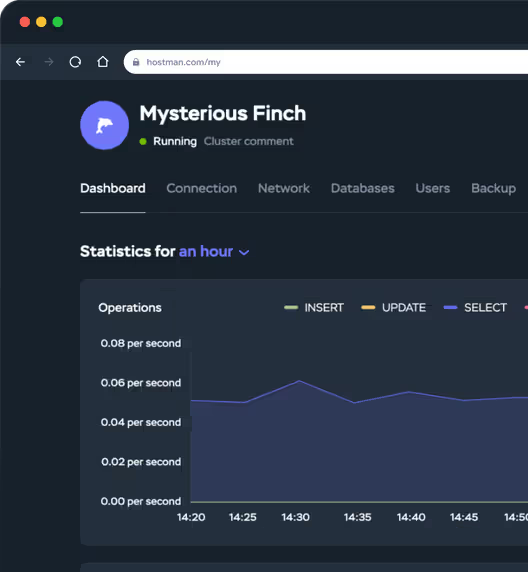

One panel to rule them all

Hear it from our users

"Hostman Comprehensive Review of Simplicity and Potential"

"A perfect fit for everything cloud services!"

"Superb User Experience"

"Streamlined Cloud Excellence!"

"Seamless and easy to use Hosting Solution for Web Applications"

"Availing Different DB Engine Services Provided by Hostman is Convenient for my Organization usecases"

"Hostman is a great fit for me"

"Perfect website"

"Simplifying Cloud Deployment with Strengths and Areas for Growth"

"Streamlined Deployment with Room for Improvement"

Ready to get started?

management can be with Hostman.

Start turning your ideas into solutions with Hostman products and services

Cloud Servers

Apps

Object Storage

Kubernetes

Trusted by 500+ companies and developers worldwide

Global network of Hostman's data centers


Answers to Your Questions

Hostman Cloud Databases ensure top-tier security with encryption, access control, and regular audits—protecting your data from threats and unauthorized access.

Our cloud platform supports both relational (MySQL, PostgreSQL) and NoSQL (MongoDB, Redis) databases, offering full flexibility for any project type.

Yes, Hostman allows instant resource scaling—up or down—to meet your performance needs during high-load periods.

We offer automated backups, scheduled snapshots, and point-in-time recovery to ensure your data is always safe and easily restorable.

Hostman uses a pay-as-you-go pricing model—you only pay for the storage and computing power you actually consume.

No, our automated failover and rolling updates minimize downtime, keeping your cloud databases online and functional 24/7.

Absolutely. Our expert support and migration tools ensure smooth and low-downtime transfers from your current infrastructure.

Hostman provides 24/7 technical support for database performance, setup, troubleshooting, and optimization—real help when you need it.

Yes, you can easily connect third-party tools and services to extend the capabilities of your cloud database environment.

We encrypt data both at rest and in transit and enforce strict privacy controls, ensuring compliance with global data protection standards.

Hostman Cloud Databases include real-time monitoring, performance tuning, and automated alerts—helping you maintain top database performance at all times.

Take your database to the next level

to help you find the perfect solution for your business and support you every step of the way.